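MediaPipe delivers high performance at low latency on the CPU and offers a rich set of features, such as face detection, hand tracking and recognition, and full-body pose tracking. Wouldn't it be great to add these features to our own applications?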

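In Python, we can use MediaPipe simply by installing the MediaPipe package. But if a desktop application wants to use MediaPipe, does it have to bundle a Python environment with MediaPipe and call it through local inter-process communication? Is there a simpler, loosely coupled approach?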

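If we instead compile a MediaPipe module as C++ and expose it through the interface of a dynamic link library (DLL), the functionality is encapsulated and can be used anywhere, and we no longer have to deal with cross-language calls such as C++ to Python or C# to Python.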

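The following sections explain how to package MediaPipe's hand tracking and recognition module as a DLL. The same approach can serve as a starting point for packaging other MediaPipe modules.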

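2 Setting up the MediaPipe build environment

To build the Windows C++ version of MediaPipe, follow my tutorial (published on both sites):

- Mediapipe - Building the Mediapipe C++ version on Windows 10: a step-by-step tutorial - StubbornHuang Blog

- Mediapipe - Building the Mediapipe C++ version on Windows 10: a step-by-step tutorial - HW140701's blog - CSDN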

If you can complete that build process successfully, your build environment is set up correctly and you can continue with the steps below.

3 Packaging MediaPipe hand tracking as a DLL

The DLL wrapper code, the build files and the test program are open source on GitHub:

- GitHub - HW140701/GoogleMediapipePackageDll: package google mediapipe hand and holistic tracking into a dynamic link library

Stars are welcome!

3.1 DLL interface design

The DLL interface is designed as follows:

```cpp
/*
@brief Callback that delivers the hand landmark coordinates
@param[out] image_index  video frame index
@param[out] infos        1-D array holding the landmark coordinates
@param[out] count        array length, i.e. the number of landmarks
*/
typedef void(*LandmarksCallBack)(int image_index, PoseInfo* infos, int count);

/*
@brief Callback that delivers the gesture recognition results
@param[out] image_index    video frame index
@param[out] recogn_result  1-D array holding the gesture recognition results
@param[out] count          array length, i.e. the number of results
*/
typedef void(*GestureResultCallBack)(int image_index, int* recogn_result, int count);

/*
@brief Initialize Google MediaPipe
@param[in] model_path  path of the model (graph configuration file) to load
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Init(const char* model_path);

/*
@brief Register the landmark coordinate callback
@param func  callback function pointer
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Reigeter_Landmarks_Callback(LandmarksCallBack func);

/*
@brief Register the gesture recognition result callback
@param func  callback function pointer
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Register_Gesture_Result_Callback(GestureResultCallBack func);

/*
@brief Run detection on a single video frame
@param[in] image_index   video frame index
@param[in] image_width   frame width in pixels
@param[in] image_height  frame height in pixels
@param[in] image_data    frame pixel data (8-bit, 3-channel BGR, rows tightly packed)
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Detect_Frame(int image_index, int image_width, int image_height, void* image_data);

/*
@brief Run detection on a video file
@param[in] video_path  path of the video file
@param[in] show_image  whether to display the annotated result video
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Detect_Video(const char* video_path, int show_image);

/*
@brief Release Google MediaPipe
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Release();
```


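3.2 DLL call sequence

First call Mediapipe_Hand_Tracking_Init with the model path to initialize the Google MediaPipe graph. Then register the landmark callback and/or the gesture result callback. Next, call Mediapipe_Hand_Tracking_Detect_Frame to process individual video frames, or Mediapipe_Hand_Tracking_Detect_Video to process a whole video file; the results are delivered through the callbacks registered in the previous step. After all frames, or the whole video, have been processed, call Mediapipe_Hand_Tracking_Release to release the resources.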
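One way to make this call order hard to get wrong on the client side is to wrap it in an RAII object: initialization and callback registration in the constructor, release in the destructor. The sketch below assumes the exported functions have already been resolved into a table of function pointers (for example with GetProcAddress); `HandTrackingApi` and `HandTrackingSession` are hypothetical names, not part of the DLL.

```cpp
#include <cassert>
#include <stdexcept>

// Mirrors PoseInfo and the callback typedefs from hand_tracking_api.h.
struct PoseInfo { float x; float y; };
typedef void (*LandmarksCallBack)(int image_index, PoseInfo* infos, int count);
typedef void (*GestureResultCallBack)(int image_index, int* recogn_result, int count);

// Hypothetical table of the DLL's exported functions.
struct HandTrackingApi {
    int (*init)(const char* model_path);
    int (*register_landmarks)(LandmarksCallBack func);
    int (*register_gesture)(GestureResultCallBack func);
    int (*detect_frame)(int image_index, int image_width, int image_height, void* image_data);
    int (*release)();
};

// Enforces the order Init -> register callbacks -> Detect -> Release.
class HandTrackingSession {
public:
    HandTrackingSession(const HandTrackingApi& api, const char* model_path,
                        LandmarksCallBack on_landmarks, GestureResultCallBack on_gesture)
        : api_(api) {
        if (api_.init(model_path) != 1)
            throw std::runtime_error("Mediapipe_Hand_Tracking_Init failed");
        // Callbacks are optional; register only the ones supplied.
        if (on_landmarks != nullptr) api_.register_landmarks(on_landmarks);
        if (on_gesture != nullptr) api_.register_gesture(on_gesture);
    }
    // Release runs even if the caller leaves the scope early.
    ~HandTrackingSession() { api_.release(); }

    HandTrackingSession(const HandTrackingSession&) = delete;
    HandTrackingSession& operator=(const HandTrackingSession&) = delete;

    // True if the DLL reported success (1) for this frame.
    bool Detect(int image_index, int width, int height, void* bgr_data) {
        return api_.detect_frame(image_index, width, height, bgr_data) == 1;
    }

private:
    HandTrackingApi api_;
};
```

If initialization fails, the constructor throws and the destructor never runs, so Release is only called on a graph that was actually started.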

3.3 Implementation

3.3.1 MediaPipe hand tracking and recognition class

hand_tracking_detect.h

```cpp
#ifndef HAND_TRACKING_DETECT_H
#define HAND_TRACKING_DETECT_H

#include <memory>

#include "absl/flags/flag.h"
#include "absl/flags/parse.h"
#include "mediapipe/framework/calculator_framework.h"
#include "mediapipe/framework/formats/image_frame.h"
#include "mediapipe/framework/formats/image_frame_opencv.h"
#include "mediapipe/framework/port/file_helpers.h"
#include "mediapipe/framework/port/opencv_highgui_inc.h"
#include "mediapipe/framework/port/opencv_imgproc_inc.h"
#include "mediapipe/framework/port/opencv_video_inc.h"
#include "mediapipe/framework/port/parse_text_proto.h"
#include "mediapipe/framework/port/status.h"
#include "mediapipe/framework/formats/detection.pb.h"
#include "mediapipe/framework/formats/landmark.pb.h"
#include "mediapipe/framework/formats/rect.pb.h"

#include "hand_tracking_data.h"

namespace GoogleMediapipeHandTrackingDetect {

typedef void(*LandmarksCallBack)(int image_index, PoseInfo* infos, int count);
typedef void(*GestureResultCallBack)(int image_index, int* recogn_result, int count);

class HandTrackingDetect
{
public:
    HandTrackingDetect();
    virtual ~HandTrackingDetect();

public:
    int InitGraph(const char* model_path);
    int RegisterLandmarksCallback(LandmarksCallBack func);
    int RegisterGestureResultCallBack(GestureResultCallBack func);
    int DetectFrame(int image_index, int image_width, int image_height, void* image_data);
    int DetectVideo(const char* video_path, int show_image);
    int Release();

private:
    absl::Status Mediapipe_InitGraph(const char* model_path);
    absl::Status Mediapipe_RunMPPGraph(int image_index, int image_width, int image_height, void* image_data);
    absl::Status Mediapipe_RunMPPGraph(const char* video_path, int show_image);
    absl::Status Mediapipe_ReleaseGraph();

private:
    bool m_bIsInit;
    bool m_bIsRelease;

    const char* m_kInputStream;
    const char* m_kOutputStream;
    const char* m_kWindowName;
    const char* m_kOutputLandmarks;

    LandmarksCallBack m_LandmarksCallBackFunc;
    GestureResultCallBack m_GestureResultCallBackFunc;

    mediapipe::CalculatorGraph m_Graph;
    std::unique_ptr<mediapipe::OutputStreamPoller> m_pPoller;            // "output_video" stream
    std::unique_ptr<mediapipe::OutputStreamPoller> m_pPoller_landmarks;  // "landmarks" stream
};

}

#endif // HAND_TRACKING_DETECT_H
```


hand_tracking_detect.cpp

```cpp
#include <memory>
#include <string>
#include <vector>

#include "hand_tracking_detect.h"
#include "hand_gesture_recognition.h"

namespace {

// Reads this frame's landmarks packet (if any), runs gesture recognition on
// each detected hand and invokes the registered callbacks.
void DispatchLandmarks(mediapipe::OutputStreamPoller& landmarks_poller,
                       GoogleMediapipeHandTrackingDetect::LandmarksCallBack landmarks_callback,
                       GoogleMediapipeHandTrackingDetect::GestureResultCallBack gesture_callback,
                       int image_index, int image_cols, int image_rows)
{
    // No landmarks packet is produced when no hand is detected, so only read
    // one that is already queued instead of blocking on Next()
    if (landmarks_poller.QueueSize() <= 0)
        return;
    mediapipe::Packet packet_landmarks;
    if (!landmarks_poller.Next(&packet_landmarks))
        return;

    const auto& output_landmarks =
        packet_landmarks.Get<std::vector<mediapipe::NormalizedLandmarkList>>();

    std::vector<int> hand_gesture_recognition_result(output_landmarks.size());
    std::vector<PoseInfo> hand_landmarks;  // all hands, one hand's points after another
    GoogleMediapipeHandTrackingDetect::HandGestureRecognition handGestureRecognition;

    for (size_t m = 0; m < output_landmarks.size(); ++m)
    {
        const mediapipe::NormalizedLandmarkList& single_hand = output_landmarks[m];
        std::vector<PoseInfo> singleHandGestureInfo;
        for (int i = 0; i < single_hand.landmark_size(); ++i)
        {
            // Landmarks are normalized to [0, 1]; convert to pixel coordinates
            const mediapipe::NormalizedLandmark& landmark = single_hand.landmark(i);
            PoseInfo info;
            info.x = landmark.x() * image_cols;
            info.y = landmark.y() * image_rows;
            singleHandGestureInfo.push_back(info);
            hand_landmarks.push_back(info);
        }
        hand_gesture_recognition_result[m] = handGestureRecognition.GestureRecognition(singleHandGestureInfo);
    }

    // Deliver the landmark coordinates (the buffer is only valid during the call)
    if (landmarks_callback)
        landmarks_callback(image_index, hand_landmarks.data(), static_cast<int>(hand_landmarks.size()));

    // Deliver the gesture recognition results
    if (gesture_callback)
        gesture_callback(image_index, hand_gesture_recognition_result.data(),
                         static_cast<int>(hand_gesture_recognition_result.size()));
}

}  // namespace

GoogleMediapipeHandTrackingDetect::HandTrackingDetect::HandTrackingDetect()
{
    m_bIsInit = false;
    m_bIsRelease = false;
    m_kInputStream = "input_video";
    m_kOutputStream = "output_video";
    m_kWindowName = "MediaPipe";
    m_kOutputLandmarks = "landmarks";
    m_LandmarksCallBackFunc = nullptr;
    m_GestureResultCallBackFunc = nullptr;
}

GoogleMediapipeHandTrackingDetect::HandTrackingDetect::~HandTrackingDetect()
{
    // Only a graph that was started needs to be shut down
    if (m_bIsInit && !m_bIsRelease)
        Release();
}

int GoogleMediapipeHandTrackingDetect::HandTrackingDetect::InitGraph(const char* model_path)
{
    absl::Status run_status = Mediapipe_InitGraph(model_path);
    if (!run_status.ok())
        return 0;
    m_bIsInit = true;
    return 1;
}

int GoogleMediapipeHandTrackingDetect::HandTrackingDetect::RegisterLandmarksCallback(LandmarksCallBack func)
{
    if (func != nullptr)
    {
        m_LandmarksCallBackFunc = func;
        return 1;
    }
    return 0;
}

int GoogleMediapipeHandTrackingDetect::HandTrackingDetect::RegisterGestureResultCallBack(GestureResultCallBack func)
{
    if (func != nullptr)
    {
        m_GestureResultCallBackFunc = func;
        return 1;
    }
    return 0;
}

int GoogleMediapipeHandTrackingDetect::HandTrackingDetect::DetectFrame(int image_index, int image_width, int image_height, void* image_data)
{
    if (!m_bIsInit)
        return 0;
    absl::Status run_status = Mediapipe_RunMPPGraph(image_index, image_width, image_height, image_data);
    return run_status.ok() ? 1 : 0;
}

int GoogleMediapipeHandTrackingDetect::HandTrackingDetect::DetectVideo(const char* video_path, int show_image)
{
    if (!m_bIsInit)
        return 0;
    absl::Status run_status = Mediapipe_RunMPPGraph(video_path, show_image);
    return run_status.ok() ? 1 : 0;
}

int GoogleMediapipeHandTrackingDetect::HandTrackingDetect::Release()
{
    absl::Status run_status = Mediapipe_ReleaseGraph();
    if (!run_status.ok())
        return 0;
    m_bIsRelease = true;
    return 1;
}

absl::Status GoogleMediapipeHandTrackingDetect::HandTrackingDetect::Mediapipe_InitGraph(const char* model_path)
{
    std::string calculator_graph_config_contents;
    MP_RETURN_IF_ERROR(mediapipe::file::GetContents(model_path, &calculator_graph_config_contents));
    mediapipe::CalculatorGraphConfig config =
        mediapipe::ParseTextProtoOrDie<mediapipe::CalculatorGraphConfig>(
            calculator_graph_config_contents);
    MP_RETURN_IF_ERROR(m_Graph.Initialize(config));

    // Add the video output stream (assert() is compiled out in -c opt builds,
    // so propagate the error instead)
    auto sop = m_Graph.AddOutputStreamPoller(m_kOutputStream);
    if (!sop.ok())
        return sop.status();
    m_pPoller = std::make_unique<mediapipe::OutputStreamPoller>(std::move(sop.value()));

    // Add the landmarks output stream
    mediapipe::StatusOrPoller sop_landmark = m_Graph.AddOutputStreamPoller(m_kOutputLandmarks);
    if (!sop_landmark.ok())
        return sop_landmark.status();
    m_pPoller_landmarks = std::make_unique<mediapipe::OutputStreamPoller>(std::move(sop_landmark.value()));

    MP_RETURN_IF_ERROR(m_Graph.StartRun({}));
    return absl::OkStatus();
}

absl::Status GoogleMediapipeHandTrackingDetect::HandTrackingDetect::Mediapipe_RunMPPGraph(int image_index, int image_width, int image_height, void* image_data)
{
    // Wrap the caller's BGR buffer in a cv::Mat (no copy), then convert into a
    // separate Mat so the caller's buffer is left untouched
    cv::Mat camera_frame_raw(cv::Size(image_width, image_height), CV_8UC3, (uchar*)image_data);
    cv::Mat camera_frame;
    cv::cvtColor(camera_frame_raw, camera_frame, cv::COLOR_BGR2RGB);
    cv::flip(camera_frame, camera_frame, /*flipcode=HORIZONTAL*/ 1);

    // Wrap Mat into an ImageFrame.
    auto input_frame = std::make_unique<mediapipe::ImageFrame>(
        mediapipe::ImageFormat::SRGB, camera_frame.cols, camera_frame.rows,
        mediapipe::ImageFrame::kDefaultAlignmentBoundary);
    cv::Mat input_frame_mat = mediapipe::formats::MatView(input_frame.get());
    camera_frame.copyTo(input_frame_mat);

    // Send image packet into the graph.
    size_t frame_timestamp_us =
        (double)cv::getTickCount() / (double)cv::getTickFrequency() * 1e6;
    MP_RETURN_IF_ERROR(m_Graph.AddPacketToInputStream(
        m_kInputStream, mediapipe::Adopt(input_frame.release())
                            .At(mediapipe::Timestamp(frame_timestamp_us))));

    // Wait for this frame's output video packet
    mediapipe::Packet packet;
    if (!m_pPoller->Next(&packet))
        return absl::UnknownError("Failed to get the output video packet");

    DispatchLandmarks(*m_pPoller_landmarks, m_LandmarksCallBackFunc, m_GestureResultCallBackFunc,
                      image_index, camera_frame.cols, camera_frame.rows);
    return absl::OkStatus();
}

absl::Status GoogleMediapipeHandTrackingDetect::HandTrackingDetect::Mediapipe_RunMPPGraph(const char* video_path, int show_image)
{
    cv::VideoCapture capture;
    capture.open(video_path);
    if (!capture.isOpened())
        return absl::InvalidArgumentError("Failed to open the video file");
    if (show_image)
        cv::namedWindow(m_kWindowName, /*flags=WINDOW_AUTOSIZE*/ 1);

    int image_index = 0;
    while (true)
    {
        // Read the next video frame; stop at the end of the file
        cv::Mat camera_frame_raw;
        capture >> camera_frame_raw;
        if (camera_frame_raw.empty())
            break;
        cv::Mat camera_frame;
        cv::cvtColor(camera_frame_raw, camera_frame, cv::COLOR_BGR2RGB);
        cv::flip(camera_frame, camera_frame, /*flipcode=HORIZONTAL*/ 1);

        // Wrap Mat into an ImageFrame.
        auto input_frame = std::make_unique<mediapipe::ImageFrame>(
            mediapipe::ImageFormat::SRGB, camera_frame.cols, camera_frame.rows,
            mediapipe::ImageFrame::kDefaultAlignmentBoundary);
        cv::Mat input_frame_mat = mediapipe::formats::MatView(input_frame.get());
        camera_frame.copyTo(input_frame_mat);

        // Send image packet into the graph.
        size_t frame_timestamp_us =
            (double)cv::getTickCount() / (double)cv::getTickFrequency() * 1e6;
        MP_RETURN_IF_ERROR(m_Graph.AddPacketToInputStream(
            m_kInputStream, mediapipe::Adopt(input_frame.release())
                                .At(mediapipe::Timestamp(frame_timestamp_us))));

        // Get the graph result packet, or stop if that fails.
        mediapipe::Packet packet;
        if (!m_pPoller->Next(&packet))
            break;

        DispatchLandmarks(*m_pPoller_landmarks, m_LandmarksCallBackFunc, m_GestureResultCallBackFunc,
                          image_index, camera_frame.cols, camera_frame.rows);

        if (show_image)
        {
            auto& output_frame = packet.Get<mediapipe::ImageFrame>();
            // Convert back to opencv for display or saving.
            cv::Mat output_frame_mat = mediapipe::formats::MatView(&output_frame);
            cv::Mat display_frame;
            cv::cvtColor(output_frame_mat, display_frame, cv::COLOR_RGB2BGR);
            cv::imshow(m_kWindowName, display_frame);
            cv::waitKey(1);
        }

        image_index += 1;
    }

    capture.release();
    if (show_image)
        cv::destroyWindow(m_kWindowName);
    return absl::OkStatus();
}

absl::Status GoogleMediapipeHandTrackingDetect::HandTrackingDetect::Mediapipe_ReleaseGraph()
{
    MP_RETURN_IF_ERROR(m_Graph.CloseInputStream(m_kInputStream));
    return m_Graph.WaitUntilDone();
}
```

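Both Mediapipe_RunMPPGraph overloads stamp each input packet with a timestamp derived from cv::getTickCount(). MediaPipe requires the timestamps on one input stream to be strictly increasing, and AddPacketToInputStream fails otherwise, so two calls that land on the same microsecond would make DetectFrame return 0. A minimal sketch of a generator that rules this out, assuming std::chrono::steady_clock is an acceptable time source; `MonotonicTimestampUs` is a hypothetical helper, not part of MediaPipe.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Produces strictly increasing microsecond timestamps from a monotonic clock.
// If the clock has not advanced since the last call, the previous value is
// bumped by 1 us so the same timestamp is never handed out twice.
class MonotonicTimestampUs {
public:
    std::int64_t Next() {
        const std::int64_t now = std::chrono::duration_cast<std::chrono::microseconds>(
            std::chrono::steady_clock::now().time_since_epoch()).count();
        last_ = (now > last_) ? now : last_ + 1;
        return last_;
    }

private:
    std::int64_t last_ = -1;  // nothing issued yet
};
```

In the class above it could be kept as a member and used as `.At(mediapipe::Timestamp(timestamps.Next()))`, where `timestamps` is a hypothetical member name.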

3.3.2 Gesture recognition class

hand_gesture_recognition.h

```cpp
#ifndef HAND_GESTURE_RECOGNITION_H
#define HAND_GESTURE_RECOGNITION_H

#include "hand_tracking_data.h"
#include <vector>

namespace GoogleMediapipeHandTrackingDetect {

class HandGestureRecognition
{
public:
    HandGestureRecognition();
    virtual ~HandGestureRecognition();

public:
    // Returns a Gesture value, or -1 if no gesture is recognized
    int GestureRecognition(const std::vector<PoseInfo>& single_hand_joint_vector);

private:
    float Vector2DAngle(const Vector2D& vec1, const Vector2D& vec2);
};

}

#endif // !HAND_GESTURE_RECOGNITION_H
```


hand_gesture_recognition.cpp

```cpp
#include "hand_gesture_recognition.h"

#include <algorithm>
#include <cmath>

GoogleMediapipeHandTrackingDetect::HandGestureRecognition::HandGestureRecognition()
{
}

GoogleMediapipeHandTrackingDetect::HandGestureRecognition::~HandGestureRecognition()
{
}

int GoogleMediapipeHandTrackingDetect::HandGestureRecognition::GestureRecognition(const std::vector<PoseInfo>& single_hand_joint_vector)
{
    // A MediaPipe hand has exactly 21 landmarks
    if (single_hand_joint_vector.size() != 21)
        return -1;

    // Thumb angle
    Vector2D thumb_vec1;
    thumb_vec1.x = single_hand_joint_vector[0].x - single_hand_joint_vector[2].x;
    thumb_vec1.y = single_hand_joint_vector[0].y - single_hand_joint_vector[2].y;

    Vector2D thumb_vec2;
    thumb_vec2.x = single_hand_joint_vector[3].x - single_hand_joint_vector[4].x;
    thumb_vec2.y = single_hand_joint_vector[3].y - single_hand_joint_vector[4].y;

    float thumb_angle = Vector2DAngle(thumb_vec1, thumb_vec2);

    // ... (missing from this listing: the index, middle, ring and pinky angles
    //      index_angle, middle_angle, ring_angle and pink_angle, the thresholds
    //      thumb_angle_threshold and angle_threshold, the `result` variable and
    //      the checks for the other gestures; see the GitHub project)
    ... && (pink_angle < angle_threshold))
        result = Gesture::Six;
    else if ((thumb_angle < thumb_angle_threshold) && (index_angle > angle_threshold) && (middle_angle > angle_threshold) && (ring_angle > angle_threshold) && (pink_angle > angle_threshold))
        result = Gesture::ThumbUp;
    else if ((thumb_angle > 5) && (index_angle > angle_threshold) && (middle_angle < angle_threshold) && (ring_angle < angle_threshold) && (pink_angle < angle_threshold))
        result = Gesture::Ok;
    else
        result = -1;

    return result;
}

float GoogleMediapipeHandTrackingDetect::HandGestureRecognition::Vector2DAngle(const Vector2D& vec1, const Vector2D& vec2)
{
    const double PI = 3.141592653;
    double t = (vec1.x * vec2.x + vec1.y * vec2.y) /
               (std::sqrt(vec1.x * vec1.x + vec1.y * vec1.y) * std::sqrt(vec2.x * vec2.x + vec2.y * vec2.y));
    // Rounding can push the cosine slightly outside [-1, 1], where acos returns NaN
    t = std::max(-1.0, std::min(1.0, t));
    float angle = static_cast<float>(std::acos(t) * (180.0 / PI));
    return angle;
}
```

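The recognizer works by deciding, per finger, whether it is bent: for the thumb it takes the vector from landmark 2 to the wrist (landmark 0) and the vector from the tip (4) back to landmark 3. When the finger is straight both vectors point back toward the wrist and the angle between them is small; when it curls, the angle grows. A gesture is then a particular combination of bent and straight fingers. The sketch below shows that per-finger test in isolation. The index-finger landmarks used in the example (6 and 8) and the 65° threshold are assumptions for illustration, not the author's values; `AngleDeg` and `IsFingerBent` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

struct PoseInfo { float x; float y; };  // same layout as hand_tracking_data.h

// Angle in degrees between two 2-D vectors; 0 for a zero-length vector.
float AngleDeg(PoseInfo a, PoseInfo b) {
    const float na = std::hypot(a.x, a.y);
    const float nb = std::hypot(b.x, b.y);
    if (na == 0.0f || nb == 0.0f) return 0.0f;
    // Clamp so rounding error cannot push acos outside its domain.
    const float c = std::max(-1.0f, std::min(1.0f, (a.x * b.x + a.y * b.y) / (na * nb)));
    return std::acos(c) * 180.0f / 3.14159265f;
}

// Same construction as the thumb code above: v1 = wrist - base joint,
// v2 = (tip - 1) - tip. A large angle means the finger is bent.
// For the thumb this is base = 2, tip = 4.
bool IsFingerBent(const std::array<PoseInfo, 21>& hand, int base, int tip,
                  float threshold_deg) {
    const PoseInfo v1{hand[0].x - hand[base].x, hand[0].y - hand[base].y};
    const PoseInfo v2{hand[tip - 1].x - hand[tip].x, hand[tip - 1].y - hand[tip].y};
    return AngleDeg(v1, v2) > threshold_deg;
}
```

A straight finger pointing up from the wrist gives an angle near 0°, and a tip folded back toward the palm gives an angle near 180°, so any threshold between the two separates the cases; the actual thresholds in the project were tuned by the author.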

3.3.3 DLL interface export

hand_tracking_api.h

```cpp
#ifndef HAND_TRACKING_API_H
#define HAND_TRACKING_API_H

#define EXPORT

/* Define the DLL export macro */
#ifdef _WIN32
#ifdef EXPORT
#define EXPORT_API __declspec(dllexport)
#else
#define EXPORT_API __declspec(dllimport)
#endif
#else
#ifdef EXPORT
#define EXPORT_API __attribute__((visibility ("default")))
#else
#endif
#endif

struct PoseInfo;

/*
@brief Callback that delivers the hand landmark coordinates
@param[out] image_index  video frame index
@param[out] infos        1-D array holding the landmark coordinates
@param[out] count        array length, i.e. the number of landmarks
*/
typedef void(*LandmarksCallBack)(int image_index, PoseInfo* infos, int count);

/*
@brief Callback that delivers the gesture recognition results
@param[out] image_index    video frame index
@param[out] recogn_result  1-D array holding the gesture recognition results
@param[out] count          array length, i.e. the number of results
*/
typedef void(*GestureResultCallBack)(int image_index, int* recogn_result, int count);

#ifdef __cplusplus
extern "C" {
#endif

#ifndef EXPORT_API
#define EXPORT_API
#endif

/*
@brief Initialize Google MediaPipe
@param[in] model_path  path of the model (graph configuration file) to load
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Init(const char* model_path);

/*
@brief Register the landmark coordinate callback
@param func  callback function pointer
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Reigeter_Landmarks_Callback(LandmarksCallBack func);

/*
@brief Register the gesture recognition result callback
@param func  callback function pointer
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Register_Gesture_Result_Callback(GestureResultCallBack func);

/*
@brief Run detection on a single video frame
@param[in] image_index   video frame index
@param[in] image_width   frame width in pixels
@param[in] image_height  frame height in pixels
@param[in] image_data    frame pixel data (8-bit, 3-channel BGR, rows tightly packed)
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Detect_Frame(int image_index, int image_width, int image_height, void* image_data);

/*
@brief Run detection on a video file
@param[in] video_path  path of the video file
@param[in] show_image  whether to display the annotated result video
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Detect_Video(const char* video_path, int show_image);

/*
@brief Release Google MediaPipe
@return 1 on success, 0 on failure
*/
EXPORT_API int Mediapipe_Hand_Tracking_Release();

#ifdef __cplusplus
}
#endif

#endif // !HAND_TRACKING_API_H
```


hand_tracking_api.cpp

```cpp
#include "hand_tracking_api.h"
#include "hand_tracking_detect.h"

using namespace GoogleMediapipeHandTrackingDetect;

HandTrackingDetect m_HandTrackingDetect;

EXPORT_API int Mediapipe_Hand_Tracking_Init(const char* model_path)
{
    return m_HandTrackingDetect.InitGraph(model_path);
}

EXPORT_API int Mediapipe_Hand_Tracking_Reigeter_Landmarks_Callback(LandmarksCallBack func)
{
    return m_HandTrackingDetect.RegisterLandmarksCallback(func);
}

EXPORT_API int Mediapipe_Hand_Tracking_Register_Gesture_Result_Callback(GestureResultCallBack func)
{
    return m_HandTrackingDetect.RegisterGestureResultCallBack(func);
}

EXPORT_API int Mediapipe_Hand_Tracking_Detect_Frame(int image_index, int image_width, int image_height, void* image_data)
{
    return m_HandTrackingDetect.DetectFrame(image_index, image_width, image_height, image_data);
}

EXPORT_API int Mediapipe_Hand_Tracking_Detect_Video(const char* video_path, int show_image)
{
    return m_HandTrackingDetect.DetectVideo(video_path, show_image);
}

EXPORT_API int Mediapipe_Hand_Tracking_Release()
{
    return m_HandTrackingDetect.Release();
}
```

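The callback typedefs exported here carry no user-data pointer, and in the implementation above the result buffers are destroyed as soon as the callback returns (the callbacks run synchronously inside the detect call). A client that wants to hand results to one of its own objects therefore has to reach that object through a variable with static storage and copy the data out. A minimal sketch under those assumptions; `LandmarkSink`, `g_sink` and `OnLandmarks` are hypothetical client-side names.

```cpp
#include <cassert>
#include <vector>

struct PoseInfo { float x; float y; };
typedef void (*LandmarksCallBack)(int image_index, PoseInfo* infos, int count);

// Hypothetical client-side object that wants the results.
struct LandmarkSink {
    int last_index = -1;
    std::vector<PoseInfo> points;
};

// No user-data parameter in the typedef, so the target is reached globally.
static LandmarkSink* g_sink = nullptr;

// Matches LandmarksCallBack. `infos` is only valid during this call,
// so the points are copied instead of keeping the pointer.
void OnLandmarks(int image_index, PoseInfo* infos, int count) {
    if (g_sink == nullptr || count <= 0) return;
    g_sink->last_index = image_index;
    g_sink->points.assign(infos, infos + count);
}
```

`OnLandmarks` would then be passed to Mediapipe_Hand_Tracking_Reigeter_Landmarks_Callback after `g_sink` has been pointed at a live object.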

3.3.4 Data declaration header

hand_tracking_data.h

```cpp
#ifndef HAND_TRACKING_DATA_H
#define HAND_TRACKING_DATA_H

struct PoseInfo {
    float x;
    float y;
};

typedef PoseInfo Point2D;
typedef PoseInfo Vector2D;

enum Gesture
{
    NoGesture = -1,
    One = 1,
    Two = 2,
    Three = 3,
    Four = 4,
    Five = 5,
    Six = 6,
    ThumbUp = 7,
    Ok = 8,
    Fist = 9
};

#endif // !HAND_TRACKING_DATA_H
```


4 Building the DLL inside MediaPipe with Bazel

4.1 Organizing the source files and writing the Bazel BUILD file

In the MediaPipe repository, create a new folder named hand_tracking_test under mediapipe\mediapipe\examples\desktop.

Copy the files above (hand_tracking_detect.h, hand_tracking_detect.cpp, hand_gesture_recognition.h, hand_gesture_recognition.cpp, hand_tracking_api.h, hand_tracking_api.cpp and hand_tracking_data.h) into the new hand_tracking_test folder.

Copy the BUILD file from mediapipe\mediapipe\examples\desktop\hand_tracking in the original MediaPipe repository into the new directory mediapipe\mediapipe\examples\desktop\hand_tracking_test, and change this original content

```python
cc_binary(
    name = "hand_tracking_cpu",
    deps = [
        "//mediapipe/examples/desktop:demo_run_graph_main",
        "//mediapipe/graphs/hand_tracking:desktop_tflite_calculators",
    ],
)
```


to:

```python
cc_binary(
    name = "Mediapipe_Hand_Tracking",
    srcs = [
        "hand_tracking_api.h",
        "hand_tracking_api.cpp",
        "hand_tracking_detect.h",
        "hand_tracking_detect.cpp",
        "hand_tracking_data.h",
        "hand_gesture_recognition.h",
        "hand_gesture_recognition.cpp",
    ],
    linkshared = True,
    deps = [
        "//mediapipe/graphs/hand_tracking:desktop_tflite_calculators",
    ],
)
```


Where:

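- name = "Mediapipe_Hand_Tracking" sets the name of the DLL to Mediapipe_Hand_Tracking

- srcs lists the header and source files compiled into the DLL

- linkshared=True builds the target as a shared library (DLL)

- deps lists the MediaPipe targets the DLL depends on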

The complete BUILD file after the change:

```python
# Copyright 2019 The MediaPipe Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

licenses(["notice"])

package(default_visibility = ["//mediapipe/examples:__subpackages__"])

cc_binary(
    name = "hand_tracking_tflite",
    deps = [
        "//mediapipe/examples/desktop:simple_run_graph_main",
        "//mediapipe/graphs/hand_tracking:desktop_tflite_calculators",
    ],
)

cc_binary(
    name = "Mediapipe_Hand_Tracking",
    srcs = [
        "hand_tracking_api.h",
        "hand_tracking_api.cpp",
        "hand_tracking_detect.h",
        "hand_tracking_detect.cpp",
        "hand_tracking_data.h",
        "hand_gesture_recognition.h",
        "hand_gesture_recognition.cpp",
    ],
    linkshared = True,
    deps = [
        "//mediapipe/graphs/hand_tracking:desktop_tflite_calculators",
    ],
)

# Linux only
cc_binary(
    name = "hand_tracking_gpu",
    deps = [
        "//mediapipe/examples/desktop:demo_run_graph_main_gpu",
        "//mediapipe/graphs/hand_tracking:mobile_calculators",
    ],
)
```


4.2 Building the DLL

In cmd.exe, change to the MediaPipe directory and run the following command to build the DLL with Bazel.

Example command:

```bat
bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="D:\\Anaconda\\python.exe" mediapipe/examples/desktop/hand_tracking_test:Mediapipe_Hand_Tracking --verbose_failures
```


Adjust the Python interpreter path and the build target path to match your own setup.

If everything goes well, Mediapipe_Hand_Tracking.dll is generated in mediapipe\bazel-bin\mediapipe\examples\desktop\hand_tracking_test.

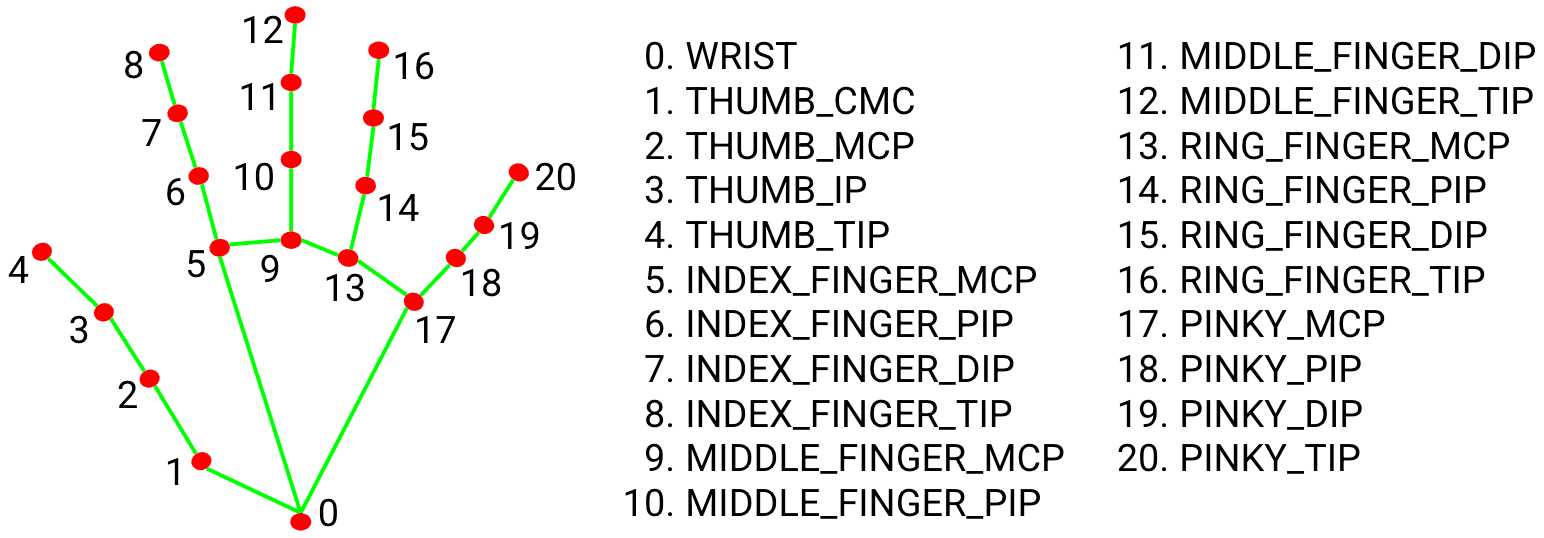

5 MediaPipe hand landmarks

For the layout of the hand landmarks, see mediapipe\docs\images\mobile\hand_landmarks.png in the MediaPipe repository.
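For reference, the 21 landmark indices in that image can be written down as an enum; the names follow MediaPipe's hand landmark documentation. The landmarks callback delivers every detected hand in one flat array (hand 0's 21 points, then hand 1's, and so on, in the same order as the gesture result array), so a client usually needs to split it back into hands. A minimal sketch; `HandLandmark`, `kLandmarksPerHand` and `SplitHands` are hypothetical names, not exported by the DLL.

```cpp
#include <cassert>
#include <vector>

struct PoseInfo { float x; float y; };

// MediaPipe hand landmark indices (see hand_landmarks.png).
enum HandLandmark {
    WRIST = 0,
    THUMB_CMC = 1, THUMB_MCP = 2, THUMB_IP = 3, THUMB_TIP = 4,
    INDEX_FINGER_MCP = 5, INDEX_FINGER_PIP = 6, INDEX_FINGER_DIP = 7, INDEX_FINGER_TIP = 8,
    MIDDLE_FINGER_MCP = 9, MIDDLE_FINGER_PIP = 10, MIDDLE_FINGER_DIP = 11, MIDDLE_FINGER_TIP = 12,
    RING_FINGER_MCP = 13, RING_FINGER_PIP = 14, RING_FINGER_DIP = 15, RING_FINGER_TIP = 16,
    PINKY_MCP = 17, PINKY_PIP = 18, PINKY_DIP = 19, PINKY_TIP = 20
};

constexpr int kLandmarksPerHand = 21;

// Splits the flat callback array into one vector per hand.
// A trailing incomplete group (which should not occur) is ignored.
std::vector<std::vector<PoseInfo>> SplitHands(const PoseInfo* infos, int count) {
    std::vector<std::vector<PoseInfo>> hands;
    for (int start = 0; start + kLandmarksPerHand <= count; start += kLandmarksPerHand)
        hands.emplace_back(infos + start, infos + start + kLandmarksPerHand);
    return hands;
}
```

Inside a LandmarksCallBack implementation, `SplitHands(infos, count)[h][INDEX_FINGER_TIP]` would then be the index fingertip of hand `h`, in pixel coordinates.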

Gesture definitions:

```cpp
enum Gesture
{
    NoGesture = -1,
    One = 1,
    Two = 2,
    Three = 3,
    Four = 4,
    Five = 5,
    Six = 6,
    ThumbUp = 7,
    Ok = 8,
    Fist = 9
};
```


6 Using the DLL

The following shows how to use the DLL in two ways: detecting individual video frames and detecting a video file.

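Note that at runtime the program loads the TensorFlow Lite models from the original MediaPipe repository, so the models under mediapipe\mediapipe\modules\hand_landmark must be placed in the directory the program runs from, keeping the mediapipe\mediapipe\modules\hand_landmark directory hierarchy. See the GitHub project for details.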
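Because a missing model directory only shows up as a failure somewhere inside the graph, it can help to check for it before calling Mediapipe_Hand_Tracking_Init. A minimal sketch using C++17 std::filesystem, assuming the model paths are resolved relative to the current working directory; the relative directory is passed in rather than hard-coded, and `ModelDirPresent` is a hypothetical helper.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>

namespace fs = std::filesystem;

// True if `relative_dir` exists as a directory under `base_dir`.
// Uses the non-throwing overload so a permission error just yields false.
bool ModelDirPresent(const fs::path& base_dir, const std::string& relative_dir) {
    std::error_code ec;
    return fs::is_directory(base_dir / relative_dir, ec);
}
```

A client could call `ModelDirPresent(fs::current_path(), "mediapipe/modules/hand_landmark")` and report a clear error instead of initializing the graph when it returns false.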

6.1 Detecting video frames

```cpp
#include <iostream>
#include <string>
#include <opencv2/opencv.hpp>

#include "DynamicModuleLoader.h"

using namespace cv;
using namespace std;
using namespace DynamicModuleLoaderSpace;

struct PoseInfo {
    float x;
    float y;
};

typedef void(*LandmarksCallBack)(int image_index, PoseInfo* infos, int count);
typedef void(*GestureResultCallBack)(int image_index, int* recogn_result, int count);

typedef int (*Func_Mediapipe_Hand_Tracking_Init)(const char* model_path);
typedef int (*Func_Mediapipe_Hand_Tracking_Reigeter_Landmarks_Callback)(LandmarksCallBack func);
typedef int (*Func_Mediapipe_Hand_Tracking_Register_Gesture_Result_Callback)(GestureResultCallBack func);
typedef int (*Func_Mediapipe_Hand_Tracking_Detect_Frame)(int image_index, int image_width, int image_height, void* image_data);
typedef int (*Func_Mediapipe_Hand_Tracking_Detect_Video)(const char* video_path, int show_image);
typedef int (*Func_Mediapipe_Hand_Tracking_Release)();

// Maps a Gesture value to a readable name
std::string GetGestureResult(int result)
{
    std::string result_str = "Unknown gesture";
    switch (result)
    {
    case 1: result_str = "One"; break;
    case 2: result_str = "Two"; break;
    case 3: result_str = "Three"; break;
    case 4: result_str = "Four"; break;
    case 5: result_str = "Five"; break;
    case 6: result_str = "Six"; break;
    case 7: result_str = "ThumbUp"; break;
    case 8: result_str = "Ok"; break;
    case 9: result_str = "Fist"; break;
    default: break;
    }
    return result_str;
}

void LandmarksCallBackImpl(int image_index, PoseInfo* infos, int count)
{
    // ... (the rest of this listing is truncated in the source; the complete
    //      test program is in the GitHub project)
```
