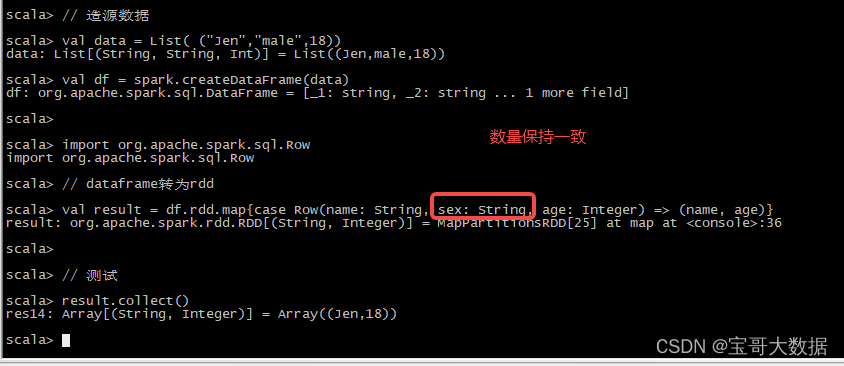

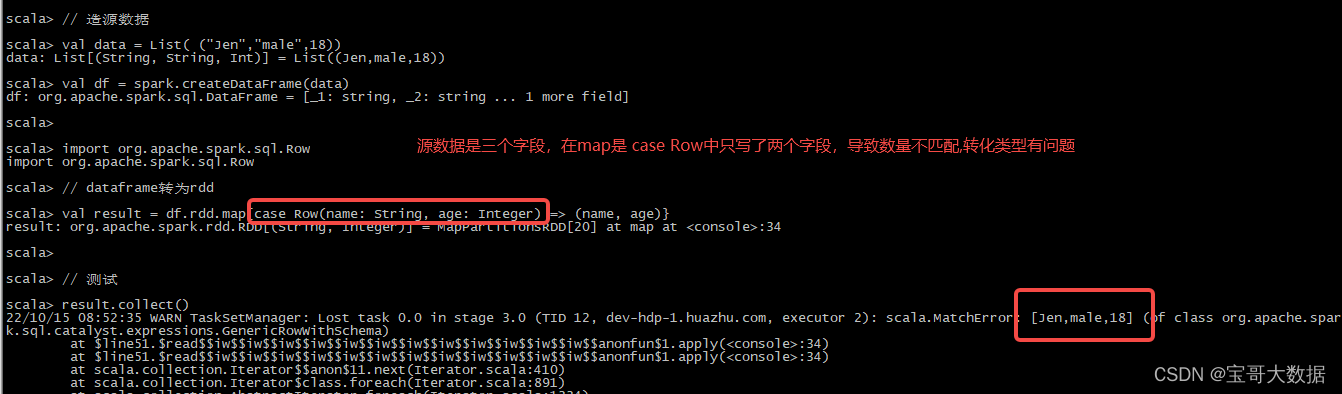

Converting a DataFrame to an RDD

// Create the source data

val data = List(("Jen", "male", 18))

val df = spark.createDataFrame(data)

import org.apache.spark.sql.Row

// Convert the DataFrame to an RDD

val result = df.rdd.map{case Row(name: String, age: Integer) => (name, age)}

result.collect()

This throws an error:

Caused by: scala.MatchError: [Jen,male,18] (of class org.apache.spark.sql.catalyst.expressions.GenericRowWithSchema)

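Cause: the field counts do not match. Each row has three fields (name, gender, age), but the pattern `Row(name: String, age: Integer)` binds only two, so no case matches and Scala throws a `MatchError`.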

Fix:
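One possible correction, as a minimal sketch rather than the only approach: make the number of patterns inside `Row(...)` equal the number of columns in the row. The session setup, the app name `row-match-sketch`, and the variable `result2` are assumptions added for illustration; in spark-shell the `spark` value already exists and `getOrCreate()` simply returns it.

```scala
import org.apache.spark.sql.{Row, SparkSession}

// Assumption: a local session for illustration; spark-shell already provides `spark`.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("row-match-sketch")
  .getOrCreate()

val data = List(("Jen", "male", 18))
val df = spark.createDataFrame(data) // columns default to _1, _2, _3

// Option 1: match all three fields, then keep only the ones needed.
val result = df.rdd.map { case Row(name: String, _: String, age: Int) => (name, age) }

// Option 2: select two columns first so a two-field pattern lines up with the row.
val result2 = df.select("_1", "_3").rdd.map { case Row(name: String, age: Int) => (name, age) }

result.collect() // Array((Jen,18))
```

Either option avoids the `MatchError`. Option 1 leaves the DataFrame untouched and ignores the unused field with `_`, while Option 2 narrows the columns before the conversion, which may be preferable when the row is wide and only a few fields are needed.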