- 01 Introduction
- 02 Configuration in detail
- 2.1 bin configuration
- 2.1.1 dolphinscheduler-daemon.sh
- 2.1.2 dolphinscheduler_env.sh
- 2.1.3 install_env.sh
- 2.1.4 install.sh
- 2.1.4.1 remove-zk-node.sh
- 2.1.4.2 scp-hosts.sh
- 2.1.5 start-all.sh/status-all.sh/stop-all.sh
- 2.2 master-server configuration
- 2.2.1 start.sh
- 2.2.2 application.yaml
- 2.2.2.1 master core configuration
- 2.2.2.2 worker core configuration
- 2.2.2.3 alert core configuration
- 2.2.2.4 quartz core configuration
- 2.3 ui configuration
- 03 Closing

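01 Introduction

Earlier posts in this series covered the core DolphinScheduler concepts. Interested readers can refer to them:

- "DolphinScheduler Tutorial (01): Getting Started"

- "DolphinScheduler Tutorial (02): System Architecture Design"

- "DolphinScheduler Tutorial (03): Source Code Analysis"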

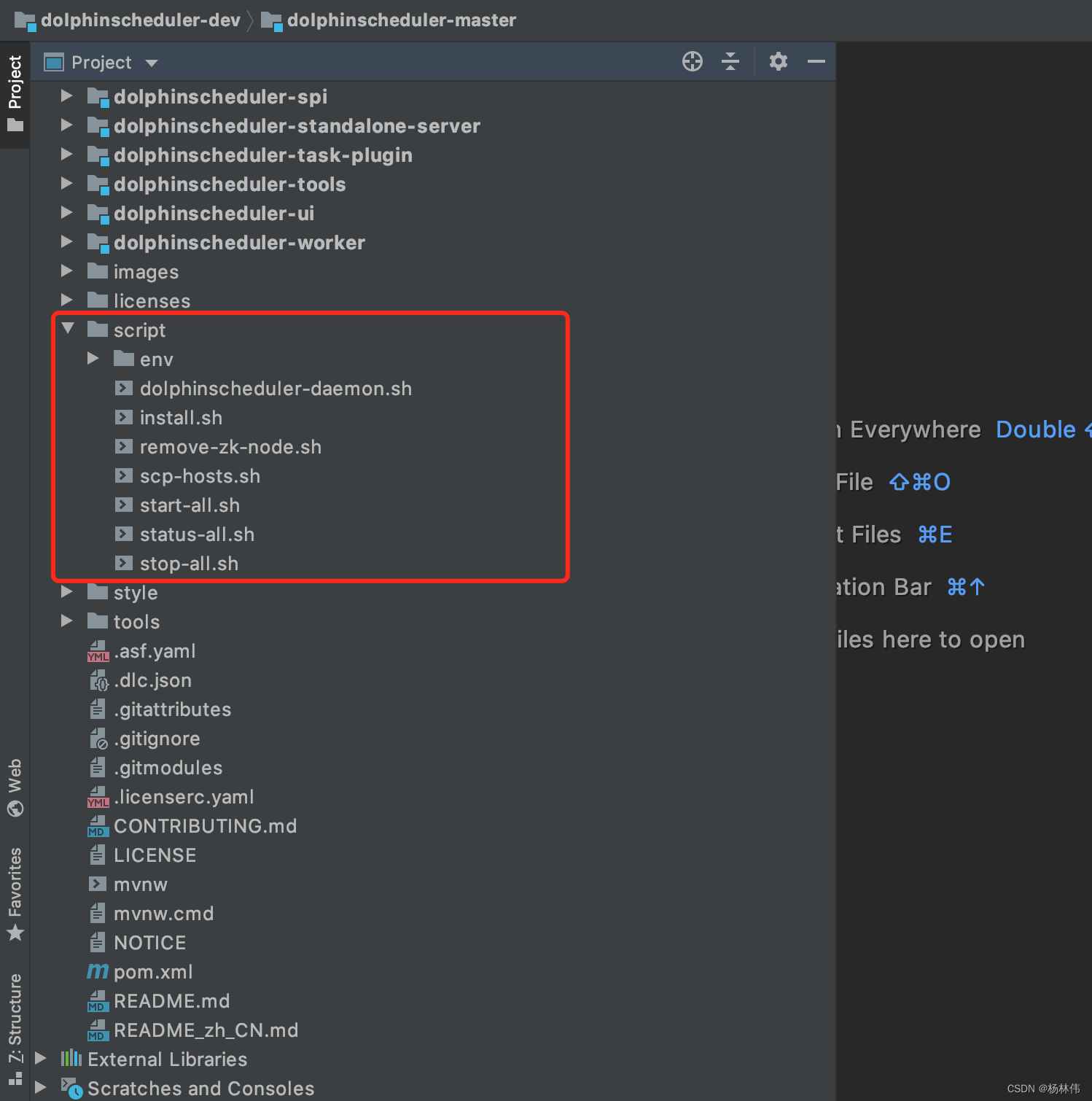

02 Configuration in detail

The earlier chapters introduced the DolphinScheduler directory structure. As a tree, it looks like this:

├── LICENSE

│----------------------------------------------------------------------

├── NOTICE

│----------------------------------------------------------------------

├── licenses

│----------------------------------------------------------------------

├── bin

│ ├── dolphinscheduler-daemon.sh

│ ├── env

│ │ ├── dolphinscheduler_env.sh

│ │ └── install_env.sh

│ ├── install.sh

│ ├── remove-zk-node.sh

│ ├── scp-hosts.sh

│ ├── start-all.sh

│ ├── status-all.sh

│ └── stop-all.sh

│----------------------------------------------------------------------

├── alert-server

│ ├── bin

│ │ └── start.sh

│ ├── conf

│ │ ├── application.yaml

│ │ ├── common.properties

│ │ ├── dolphinscheduler_env.sh

│ │ └── logback-spring.xml

│ └── libs

│----------------------------------------------------------------------

├── api-server

│ ├── bin

│ │ └── start.sh

│ ├── conf

│ │ ├── application.yaml

│ │ ├── common.properties

│ │ ├── dolphinscheduler_env.sh

│ │ └── logback-spring.xml

│ ├── libs

│ └── ui

│----------------------------------------------------------------------

├── master-server

│ ├── bin

│ │ └── start.sh

│ ├── conf

│ │ ├── application.yaml

│ │ ├── common.properties

│ │ ├── dolphinscheduler_env.sh

│ │ └── logback-spring.xml

│ └── libs

│----------------------------------------------------------------------

├── standalone-server

│ ├── bin

│ │ └── start.sh

│ ├── conf

│ │ ├── application.yaml

│ │ ├── common.properties

│ │ ├── dolphinscheduler_env.sh

│ │ ├── logback-spring.xml

│ │ └── sql

│ ├── libs

│ └── ui

│----------------------------------------------------------------------

├── tools

│ ├── bin

│ │ └── upgrade-schema.sh

│ ├── conf

│ │ ├── application.yaml

│ │ └── common.properties

│ ├── libs

│ └── sql

│----------------------------------------------------------------------

├── worker-server

│ ├── bin

│ │ └── start.sh

│ ├── conf

│ │ ├── application.yaml

│ │ ├── common.properties

│ │ ├── dolphinscheduler_env.sh

│ │ └── logback-spring.xml

│ └── libs

│----------------------------------------------------------------------

└── ui

The following sections go through them one by one.

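2.1 bin configuration

The bin directory holds DolphinScheduler's commands and environment-variable configuration. Its scripts are summarized below:

| Script | Description |
| --- | --- |
| dolphinscheduler-daemon.sh | Starts or stops a DolphinScheduler service |
| dolphinscheduler_env.sh | Loaded when dolphinscheduler-daemon.sh starts or stops a service; sets environment variables such as JAVA_HOME, HADOOP_HOME, HIVE_HOME … |
| install_env.sh | Loaded by install.sh, start-all.sh, stop-all.sh and status-all.sh; sets the environment variables used to install DolphinScheduler |
| install.sh | Installs the services automatically when DolphinScheduler is deployed in cluster or pseudo-cluster mode |
| remove-zk-node.sh | Cleans up ZooKeeper cache data |
| scp-hosts.sh | Transfers the installation files |
| start-all.sh | Starts all services when DolphinScheduler is deployed in cluster or pseudo-cluster mode |
| status-all.sh | Reports the status of all services when DolphinScheduler is deployed in cluster or pseudo-cluster mode |
| stop-all.sh | Stops all services when DolphinScheduler is deployed in cluster or pseudo-cluster mode |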

2.1.1 dolphinscheduler-daemon.sh

Purpose: starts or stops a DolphinScheduler service.

The script, with annotations:

## Usage

usage="Usage: dolphinscheduler-daemon.sh (start|stop|status) <api-server|master-server|worker-server|alert-server|standalone-server> "

# Print the usage and exit unless both an action and a server name are given

if [ $# -le 1 ]; then

echo $usage

exit 1

fi

startStop=$1

shift

command=$1

shift

# Announce the action being performed

echo "Begin $startStop $command......"

# Resolve the bin directory, the installation home, and the shared env file under bin

BIN_DIR=`dirname $0`

BIN_DIR=`cd "$BIN_DIR"; pwd`

DOLPHINSCHEDULER_HOME=`cd "$BIN_DIR/.."; pwd`

BIN_ENV_FILE="${DOLPHINSCHEDULER_HOME}/bin/env/dolphinscheduler_env.sh"

# If `bin/env/dolphinscheduler_env.sh` exists, it overwrites the per-service env file

# This lets users edit only `bin/env/dolphinscheduler_env.sh` instead of the copy under each server's conf directory

function overwrite_server_env() {

local server=$1

local server_env_file="${DOLPHINSCHEDULER_HOME}/${server}/conf/dolphinscheduler_env.sh"

if [ -f "${BIN_ENV_FILE}" ]; then

echo "Overwrite ${server}/conf/dolphinscheduler_env.sh using bin/env/dolphinscheduler_env.sh."

cp "${BIN_ENV_FILE}" "${server_env_file}"

else

echo "Start server ${server} using env config path ${server_env_file}, because file ${BIN_ENV_FILE} not exists."

fi

}

export HOSTNAME=`hostname`

export DOLPHINSCHEDULER_LOG_DIR=$DOLPHINSCHEDULER_HOME/$command/logs

export STOP_TIMEOUT=5

if [ ! -d "$DOLPHINSCHEDULER_LOG_DIR" ]; then

mkdir $DOLPHINSCHEDULER_LOG_DIR

fi

pid=$DOLPHINSCHEDULER_HOME/$command/pid

cd $DOLPHINSCHEDULER_HOME/$command

if [ "$command" = "api-server" ]; then

log=$DOLPHINSCHEDULER_HOME/api-server/logs/$command-$HOSTNAME.out

elif [ "$command" = "master-server" ]; then

log=$DOLPHINSCHEDULER_HOME/master-server/logs/$command-$HOSTNAME.out

elif [ "$command" = "worker-server" ]; then

log=$DOLPHINSCHEDULER_HOME/worker-server/logs/$command-$HOSTNAME.out

elif [ "$command" = "alert-server" ]; then

log=$DOLPHINSCHEDULER_HOME/alert-server/logs/$command-$HOSTNAME.out

elif [ "$command" = "standalone-server" ]; then

log=$DOLPHINSCHEDULER_HOME/standalone-server/logs/$command-$HOSTNAME.out

else

echo "Error: No command named '$command' was found."

exit 1

fi

# Run the start, stop, or status action

case $startStop in

(start)

echo starting $command, logging to $DOLPHINSCHEDULER_LOG_DIR

overwrite_server_env "${command}"

nohup /bin/bash "$DOLPHINSCHEDULER_HOME/$command/bin/start.sh" > $log 2>&1 &

echo $! > $pid

;;

(stop)

if [ -f $pid ]; then

TARGET_PID=`cat $pid`

if kill -0 $TARGET_PID > /dev/null 2>&1; then

echo stopping $command

pkill -P $TARGET_PID

sleep $STOP_TIMEOUT

if kill -0 $TARGET_PID > /dev/null 2>&1; then

echo "$command did not stop gracefully after $STOP_TIMEOUT seconds: killing with kill -9"

pkill -9 -P $TARGET_PID

fi

else

echo no $command to stop

fi

rm -f $pid

else

echo no $command to stop

fi

;;

(status)

# more details about the status can be added later

serverCount=`ps -ef | grep "$DOLPHINSCHEDULER_HOME" | grep "$CLASS" | grep -v "grep" | wc -l`

state="STOP"

# font color - red

state="[ \033[1;31m $state \033[0m ]"

if [[ $serverCount -gt 0 ]];then

state="RUNNING"

# font color - green

state="[ \033[1;32m $state \033[0m ]"

fi

echo -e "$command $state"

;;

(*)

echo $usage

exit 1

;;

esac

echo "End $startStop $command."
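The stop branch above relies on `kill -0`, which sends no signal and only reports whether the PID still exists. Here is a minimal sketch of the same "ask politely, wait, then force" pattern. The function `stop_by_pid_file` is a hypothetical helper of my own, not part of DolphinScheduler, and it signals the recorded PID directly, whereas the script above uses `pkill -P` to signal that PID's child processes.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of DolphinScheduler: stop the process recorded
# in a pid file, escalating to SIGKILL if it survives the timeout.
stop_by_pid_file() {
  local pid_file="$1"
  local timeout="${2:-5}"
  local target_pid waited=0

  if [ ! -f "$pid_file" ]; then
    echo "nothing to stop"
    return 0
  fi

  target_pid=$(cat "$pid_file")
  # kill -0 delivers no signal; it only reports whether the PID can be signalled.
  if kill -0 "$target_pid" > /dev/null 2>&1; then
    kill "$target_pid"
    while kill -0 "$target_pid" > /dev/null 2>&1 && [ "$waited" -lt "$timeout" ]; do
      sleep 1
      waited=$((waited + 1))
    done
    if kill -0 "$target_pid" > /dev/null 2>&1; then
      kill -9 "$target_pid"
    fi
    echo "stopped"
  else
    echo "nothing to stop"
  fi
  rm -f "$pid_file"
}

# Usage: start a throwaway background process, record its PID, then stop it.
demo_pid_file=$(mktemp)
sleep 30 &
echo $! > "$demo_pid_file"
stop_by_pid_file "$demo_pid_file" 3
```

Polling once per second lets the function return as soon as the process exits, rather than always sleeping for the full timeout.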

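2.1.2 dolphinscheduler_env.sh

Purpose: when dolphinscheduler-daemon.sh starts or stops a service, this script is run to load the environment-variable configuration (for example JAVA_HOME, HADOOP_HOME, HIVE_HOME …).

The configuration, with annotations:

# The JAVA_HOME used to start the DolphinScheduler services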

export JAVA_HOME=${JAVA_HOME:-/opt/soft/java}

# Database settings: database type, connection URL, username and password

export DATABASE=${DATABASE:-postgresql}

export SPRING_PROFILES_ACTIVE=${DATABASE}

export SPRING_DATASOURCE_URL

export SPRING_DATASOURCE_USERNAME

export SPRING_DATASOURCE_PASSWORD

# DolphinScheduler server settings

export SPRING_CACHE_TYPE=${SPRING_CACHE_TYPE:-none}

export SPRING_JACKSON_TIME_ZONE=${SPRING_JACKSON_TIME_ZONE:-UTC}

export MASTER_FETCH_COMMAND_NUM=${MASTER_FETCH_COMMAND_NUM:-10}

# Registry settings: the registry type and its connection address

export REGISTRY_TYPE=${REGISTRY_TYPE:-zookeeper}

export REGISTRY_ZOOKEEPER_CONNECT_STRING=${REGISTRY_ZOOKEEPER_CONNECT_STRING:-localhost:2181}

# Settings for workflow tasks; adjust them to what your tasks actually need

export HADOOP_HOME=${HADOOP_HOME:-/opt/soft/hadoop}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/opt/soft/hadoop/etc/hadoop}

export SPARK_HOME1=${SPARK_HOME1:-/opt/soft/spark1}

export SPARK_HOME2=${SPARK_HOME2:-/opt/soft/spark2}

export PYTHON_HOME=${PYTHON_HOME:-/opt/soft/python}

export HIVE_HOME=${HIVE_HOME:-/opt/soft/hive}

export FLINK_HOME=${FLINK_HOME:-/opt/soft/flink}

export DATAX_HOME=${DATAX_HOME:-/opt/soft/datax}

# Put the bin directories of the tools configured above on PATH

export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$SPARK_HOME2/bin:$PYTHON_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$FLINK_HOME/bin:$DATAX_HOME/bin:$PATH
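Almost every line in this file uses the `${VAR:-default}` expansion: a value already present in the environment wins, and the default applies only when the variable is unset or empty. A small sketch of that behaviour follows; the function name `active_profile` is my own, used only for illustration.

```shell
#!/usr/bin/env bash
# Illustrative only: reproduce how dolphinscheduler_env.sh derives
# SPRING_PROFILES_ACTIVE from DATABASE. The subshell keeps the caller's
# environment untouched.
active_profile() {
  (
    export DATABASE=${DATABASE:-postgresql}
    export SPRING_PROFILES_ACTIVE=${DATABASE}
    echo "$SPRING_PROFILES_ACTIVE"
  )
}

unset DATABASE
echo "DATABASE unset -> $(active_profile)"

DATABASE=mysql
echo "DATABASE=mysql -> $(active_profile)"

DATABASE=""
echo "DATABASE empty -> $(active_profile)"
```

In practice this means you can either edit the defaults in the file or export the variables before calling the scripts.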

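2.1.3 install_env.sh

Purpose: when install.sh, start-all.sh, stop-all.sh or status-all.sh is used, this script is run to load the environment variables for installing DolphinScheduler.

The configuration, with annotations:

# Machines on which DolphinScheduler is installed (covering master, worker, api and alert)

# For a pseudo-cluster deployment, list only the hostname of the pseudo-cluster machine

# For example:

# - Hostname example: ips="ds1,ds2,ds3,ds4,ds5"

# - IP example: ips="192.168.8.1,192.168.8.2,192.168.8.3,192.168.8.4,192.168.8.5"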

ips=${ips:-"ds1,ds2,ds3,ds4,ds5"}

# SSH port, 22 by default

sshPort=${sshPort:-"22"}

# Hostnames or IP addresses of the master servers (must be a subset of ips)

# For example:

# - Hostname example: masters="ds1,ds2"

# - IP example: masters="192.168.8.1,192.168.8.2"

masters=${masters:-"ds1,ds2"}

# Hostnames or IP addresses of the worker servers (must be a subset of ips); each entry is host:group

# For example:

# - Hostname example: workers="ds1:default,ds2:default,ds3:default"

# - IP example: workers="192.168.8.1:default,192.168.8.2:default,192.168.8.3:default"

workers=${workers:-"ds1:default,ds2:default,ds3:default,ds4:default,ds5:default"}

# Hostname or IP address of the alert server (must be a subset of ips)

# - Hostname example: alertServer="ds3"

# - IP example: alertServer="192.168.8.3"

alertServer=${alertServer:-"ds3"}

# Hostnames or IP addresses of the API servers (must be a subset of ips)

# - Hostname example: apiServers="ds1"

# - IP example: apiServers="192.168.8.1"

apiServers=${apiServers:-"ds1"}

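# Directory in which DolphinScheduler is installed on every machine configured above; `install.sh` creates it automatically

# Note: do not set this to the current directory. If you use a relative path, do not wrap it in quotes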

installPath=${installPath:-"/tmp/dolphinscheduler"}

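# The user that deploys DolphinScheduler on every machine configured above.

# You must create this user yourself before running `install.sh`.

# The user needs sudo privileges and permission to operate HDFS. If HDFS is enabled, this user must create the root directory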

deployUser=${deployUser:-"dolphinscheduler"}

# Root node in ZooKeeper; ZooKeeper is currently DolphinScheduler's default registry.

zkRoot=${zkRoot:-"/dolphinscheduler"}
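The comments above require masters, workers, alertServer and apiServers to be subsets of ips, but nothing in this file enforces that. The following is a rough sketch of a pre-flight check you could run before installing. `missing_hosts` is a hypothetical helper of my own and `ds9` is a made-up host used to trigger the failure case.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check, not part of DolphinScheduler.
# Prints every host in $1 that is missing from $2 and returns non-zero if any
# is missing. Both arguments are comma-separated; a ":group" suffix is ignored.
missing_hosts() {
  local candidates="$1" universe="$2" entry host status=0
  for entry in $(printf '%s' "$candidates" | tr ',' ' '); do
    host=${entry%%:*}
    case ",$universe," in
      *",$host,"*) ;;
      *) echo "$host"; status=1 ;;
    esac
  done
  return $status
}

ips="ds1,ds2,ds3,ds4,ds5"
missing_hosts "ds1,ds2" "$ips" && echo "masters ok"
missing_hosts "ds1:default,ds9:default" "$ips" || echo "workers reference hosts outside ips"
```

Wrapping both strings in commas before matching avoids false positives such as `ds1` matching `ds10`.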

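2.1.4 install.sh

Purpose: when DolphinScheduler is deployed in cluster or pseudo-cluster mode, this script installs the services automatically.

The script, with annotations: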

workDir=`dirname $0`

workDir=`cd ${workDir};pwd`

source ${workDir}/env/install_env.sh

source ${workDir}/env/dolphinscheduler_env.sh

# 1. Create the installation directory

echo "1.create directory"

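# If installPath is "/", resolves to "/", or is empty, the directory "/bin" would be overwritten or have files added to it,

# so its value must be checked. `realpath` is used to resolve the path; that part of the check is skipped if the shell environment has no `realpath` command.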

if [ ! -d $installPath ];then

sudo mkdir -p $installPath

sudo chown -R $deployUser:$deployUser $installPath

elif [[ -z "${installPath// }" || "${installPath// }" == "/" || ( $(command -v realpath) && $(realpath -s "${installPath}") == "/" ) ]]; then

echo "Parameter installPath can not be empty, use in root path or related path of root path, currently use ${installPath}"

exit 1

fi

# 2. Copy the resources to every host

echo "2.scp resources"

bash ${workDir}/scp-hosts.sh

if [ $? -eq 0 ];then

echo 'scp copy completed'

else

echo 'scp copy failed to exit'

exit 1

fi

# 3. Stop the services

echo "3.stop server"

bash ${workDir}/stop-all.sh

# 4. Delete the ZooKeeper node

echo "4.delete zk node"

bash ${workDir}/remove-zk-node.sh $zkRoot

# 5. Start the services

echo "5.startup"

bash ${workDir}/start-all.sh
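Step 1 guards against an installPath that is empty or points at the filesystem root. The sketch below restates that idea as a standalone function. `is_safe_install_path` is a hypothetical name, and it uses `cd` plus `pwd -P` rather than `realpath`, so it is an approximation of the upstream check and not a copy of it.

```shell
#!/usr/bin/env bash
# Hypothetical guard, not the upstream implementation: reject install paths
# that are empty, blank, or resolve to the filesystem root.
is_safe_install_path() {
  local path="$1" trimmed resolved
  trimmed=$(printf '%s' "$path" | tr -d ' ')
  if [ -z "$trimmed" ] || [ "$trimmed" = "/" ]; then
    return 1
  fi
  # For an existing directory, resolve ".." and symlinks before comparing.
  if [ -d "$path" ]; then
    resolved=$(cd "$path" && pwd -P)
    [ "$resolved" != "/" ] || return 1
  fi
  return 0
}

for candidate in "/tmp/dolphinscheduler" "/" ""; do
  if is_safe_install_path "$candidate"; then
    echo "ok: '$candidate'"
  else
    echo "rejected: '$candidate'"
  fi
done
```

Running the validation before any `mkdir` or `chown` keeps a bad value from ever reaching a privileged command.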

2.1.4.1 remove-zk-node.sh

This script cleans up ZooKeeper cache data:

print_usage(){

printf $"USAGE:$0 rootNode\n"

exit 1

}

if [ $# -ne 1 ];then

print_usage

fi

rootNode=$1

BIN_DIR=`dirname $0`

BIN_DIR=`cd "$BIN_DIR"; pwd`

DOLPHINSCHEDULER_HOME=$BIN_DIR/..

source ${BIN_DIR}/env/install_env.sh

source ${BIN_DIR}/env/dolphinscheduler_env.sh

export JAVA_HOME=$JAVA_HOME

export DOLPHINSCHEDULER_CONF_DIR=$DOLPHINSCHEDULER_HOME/conf

export DOLPHINSCHEDULER_LIB_JARS=$DOLPHINSCHEDULER_HOME/api-server/libs/*

export DOLPHINSCHEDULER_OPTS="-Xmx1g -Xms1g -Xss512k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "

export STOP_TIMEOUT=5

CLASS=org.apache.zookeeper.ZooKeeperMain

exec_command="$DOLPHINSCHEDULER_OPTS -classpath $DOLPHINSCHEDULER_CONF_DIR:$DOLPHINSCHEDULER_LIB_JARS $CLASS -server $REGISTRY_ZOOKEEPER_CONNECT_STRING rmr $rootNode"

cd $DOLPHINSCHEDULER_HOME

$JAVA_HOME/bin/java $exec_command

2.1.4.2 scp-hosts.sh

This script transfers the installation files:

workDir=`dirname $0`

workDir=`cd ${workDir};pwd`

source ${workDir}/env/install_env.sh

workersGroup=(${workers//,/ })

for workerGroup in ${workersGroup[@]}

do

echo $workerGroup;

worker=`echo $workerGroup|awk -F':' '{print $1}'`

group=`echo $workerGroup|awk -F':' '{print $2}'`

workerNames+=($worker)

groupNames+=(${group:-default})

done

hostsArr=(${ips//,/ })

for host in ${hostsArr[@]}

do

if ! ssh -p $sshPort $host test -e $installPath; then

ssh -p $sshPort $host "sudo mkdir -p $installPath; sudo chown -R $deployUser:$deployUser $installPath"

fi

echo "scp dirs to $host/$installPath starting"

for i in ${!workerNames[@]}; do

if [[ ${workerNames[$i]} == $host ]]; then

workerIndex=$i

break

fi

done

# set worker groups in application.yaml

[[ -n ${workerIndex} ]] && sed -i "s/- default/- ${groupNames[$workerIndex]}/" $workDir/../worker-server/conf/application.yaml

for dsDir in bin master-server worker-server alert-server api-server ui tools

do

echo "start to scp $dsDir to $host/$installPath"

# Use quiet mode to reduce command line output

scp -q -P $sshPort -r $workDir/../$dsDir $host:$installPath

done

# restore worker groups to default

[[ -n ${workerIndex} ]] && sed -i "s/- ${groupNames[$workerIndex]}/- default/" $workDir/../worker-server/conf/application.yaml

echo "scp dirs to $host/$installPath complete"

done
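The first loop of scp-hosts.sh splits each `host:group` entry of `workers` and falls back to the `default` group when none is given. Below is a compact sketch of just that parsing step, written with parameter expansion instead of `awk`. `parse_workers` is a hypothetical name and `gpu` is a made-up group used for illustration.

```shell
#!/usr/bin/env bash
# Illustrative parser for the workers setting: prints one "host group" pair
# per line, using "default" when an entry carries no group.
parse_workers() {
  local entry host group
  for entry in $(printf '%s' "$1" | tr ',' ' '); do
    host=${entry%%:*}
    case $entry in
      *:*) group=${entry#*:} ;;
      *)   group= ;;
    esac
    printf '%s %s\n' "$host" "${group:-default}"
  done
}

parse_workers "ds1:default,ds2:gpu,ds3"
```

The script above then uses the group found for each host to rewrite `- default` in worker-server/conf/application.yaml before copying, and restores it afterwards.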

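2.1.5 start-all.sh/status-all.sh/stop-all.sh

When DolphinScheduler is deployed in cluster or pseudo-cluster mode, these scripts start all services, stop all services, or report the status of all services: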

########### start-all.sh ###########

workDir=`dirname $0`

workDir=`cd ${workDir};pwd`

source ${workDir}/env/install_env.sh

workersGroup=(${workers//,/ })

for workerGroup in ${workersGroup[@]}

do

echo $workerGroup;

worker=`echo $workerGroup|awk -F':' '{print $1}'`

workerNames+=($worker)

done

mastersHost=(${masters//,/ })

for master in ${mastersHost[@]}

do

echo "$master master server is starting"

ssh -o StrictHostKeyChecking=no -p $sshPort $master "cd $installPath/; bash bin/dolphinscheduler-daemon.sh start master-server;"

done

for worker in ${workerNames[@]}

do

echo "$worker worker server is starting"

ssh -o StrictHostKeyChecking=no -p $sshPort $worker "cd $installPath/; bash bin/dolphinscheduler-daemon.sh start worker-server;"

done

ssh -o StrictHostKeyChecking=no -p $sshPort $alertServer "cd $installPath/; bash bin/dolphinscheduler-daemon.sh start alert-server;"

apiServersHost=(${apiServers//,/ })

for apiServer in ${apiServersHost[@]}

do

echo "$apiServer api server is starting"

ssh -o StrictHostKeyChecking=no -p $sshPort $apiServer "cd $installPath/; bash bin/dolphinscheduler-daemon.sh start api-server;"

done

# query server status

echo "query server status"

cd $installPath/; bash bin/status-all.sh

########### stop-all.sh ###########

workDir=`dirname $0`

workDir=`cd ${workDir};pwd`

source ${workDir}/env/install_env.sh

workersGroup=(${workers//,/ })

for workerGroup in ${workersGroup[@]}

do

echo $workerGroup;

worker=`echo $workerGroup|awk -F':' '{print $1}'`

workerNames+=($worker)

done

mastersHost=(${masters//,/ })

for master in ${mastersHost[@]}

do

echo "$master master server is stopping"

ssh -o StrictHostKeyChecking=no -p $sshPort $master "cd $installPath/; bash bin/dolphinscheduler-daemon.sh stop master-server;"

done

for worker in ${workerNames[@]}

do

echo "$worker worker server is stopping"

ssh -o StrictHostKeyChecking=no -p $sshPort $worker "cd $installPath/; bash bin/dolphinscheduler-daemon.sh stop worker-server;"

done

ssh -o StrictHostKeyChecking=no -p $sshPort $alertServer "cd $installPath/; bash bin/dolphinscheduler-daemon.sh stop alert-server;"

apiServersHost=(${apiServers//,/ })

for apiServer in ${apiServersHost[@]}

do

echo "$apiServer api server is stopping"

ssh -o StrictHostKeyChecking=no -p $sshPort $apiServer "cd $installPath/; bash bin/dolphinscheduler-daemon.sh stop api-server;"

done

########### status-all.sh ###########

workDir=`dirname $0`

workDir=`cd ${workDir};pwd`

source ${workDir}/env/install_env.sh

# install_env.sh info

echo -e '\n'

echo "====================== dolphinscheduler server config ============================="

echo -e "1.dolphinscheduler server node config hosts:[ \033[1;32m ${ips} \033[0m ]"

echo -e "2.master server node config hosts:[ \033[1;32m ${masters} \033[0m ]"

echo -e "3.worker server node config hosts:[ \033[1;32m ${workers} \033[0m ]"

echo -e "4.alert server node config hosts:[ \033[1;32m ${alertServer} \033[0m ]"

echo -e "5.api server node config hosts:[ \033[1;32m ${apiServers} \033[0m ]"

# all server check state

echo -e '\n'

echo "====================== dolphinscheduler server status ============================="

firstColumn="node server state"

echo $firstColumn

echo -e '\n'

workersGroup=(${workers//,/ })

for workerGroup in ${workersGroup[@]}

do

worker=`echo $workerGroup|awk -F':' '{print $1}'`

workerNames+=($worker)

done

StateRunning="Running"

# 1.master server check state

mastersHost=(${masters//,/ })

for master in ${mastersHost[@]}

do

masterState=`ssh -p $sshPort $master "cd $installPath/; bash bin/dolphinscheduler-daemon.sh status master-server;"`

echo "$master $masterState"

done

# 2.worker server check state

for worker in ${workerNames[@]}

do

workerState=`ssh -p $sshPort $worker "cd $installPath/; bash bin/dolphinscheduler-daemon.sh status worker-server;"`

echo "$worker $workerState"

done

# 3.alert server check state

alertState=`ssh -p $sshPort $alertServer "cd $installPath/; bash bin/dolphinscheduler-daemon.sh status alert-server;"`

echo "$alertServer $alertState"

# 4.api server check state

apiServersHost=(${apiServers//,/ })

for apiServer in ${apiServersHost[@]}

do

apiState=`ssh -p $sshPort $apiServer "cd $installPath/; bash bin/dolphinscheduler-daemon.sh status api-server;"`

echo "$apiServer $apiState"

done
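All three scripts follow the same pattern: read the host lists from install_env.sh, then run `dolphinscheduler-daemon.sh` over ssh on each host. To preview what would be executed without touching any machine, a dry-run helper along these lines can help. `print_remote_commands` is hypothetical and only prints the commands, and it omits the `-o StrictHostKeyChecking=no` option that start-all.sh and stop-all.sh pass.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helper, not part of DolphinScheduler: print the ssh
# command the *-all.sh scripts would run for each host, without running it.
sshPort=${sshPort:-22}
installPath=${installPath:-/tmp/dolphinscheduler}

print_remote_commands() {
  local action="$1" server="$2" hosts="$3" entry host
  for entry in $(printf '%s' "$hosts" | tr ',' ' '); do
    host=${entry%%:*}   # drop the ":group" suffix used in the workers list
    printf 'ssh -p %s %s "cd %s/; bash bin/dolphinscheduler-daemon.sh %s %s;"\n' \
      "$sshPort" "$host" "$installPath" "$action" "$server"
  done
}

print_remote_commands start master-server "ds1,ds2"
print_remote_commands start worker-server "ds1:default,ds3:default"
```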

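2.2 master-server configuration

The master-server directory holds the master server's commands, configuration and dependencies.

The alert-server, worker-server, api-server and standalone-server directories have essentially the same layout and purpose, so master-server serves as the example here.

2.2.1 start.sh

The master-server startup script, with annotations: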

BIN_DIR=$(dirname $0)

DOLPHINSCHEDULER_HOME=${DOLPHINSCHEDULER_HOME:-$(cd $BIN_DIR/..; pwd)}

# Load the DolphinScheduler environment configuration

source "$DOLPHINSCHEDULER_HOME/conf/dolphinscheduler_env.sh"

# JVM startup options

JAVA_OPTS=${JAVA_OPTS:-"-server -Duser.timezone=${SPRING_JACKSON_TIME_ZONE} -Xms4g -Xmx4g -Xmn2g -XX:+PrintGCDetails -Xloggc:gc.log -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=dump.hprof"}

if [[ "$DOCKER" == "true" ]]; then

JAVA_OPTS="${JAVA_OPTS} -XX:-UseContainerSupport"

fi

# Start MasterServer

java $JAVA_OPTS \

-cp "$DOLPHINSCHEDULER_HOME/conf":"$DOLPHINSCHEDULER_HOME/libs/*" \

org.apache.dolphinscheduler.server.master.MasterServer
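Because JAVA_OPTS is also set with `${JAVA_OPTS:-...}`, exporting it beforehand replaces the whole default string instead of appending to it. The sketch below mimics that resolution so you can see the outcome before starting a server. `resolve_java_opts` is a hypothetical function, its default string is shortened (the GC logging and heap dump flags are left out), and the 1g heap in the second call is only an illustrative value.

```shell
#!/usr/bin/env bash
# Illustrative only: mimic how start.sh settles on its JVM options.
# The default below is a shortened version of the one in start.sh.
resolve_java_opts() {
  (
    SPRING_JACKSON_TIME_ZONE=${SPRING_JACKSON_TIME_ZONE:-UTC}
    JAVA_OPTS=${JAVA_OPTS:-"-server -Duser.timezone=${SPRING_JACKSON_TIME_ZONE} -Xms4g -Xmx4g -Xmn2g"}
    if [ "$DOCKER" = "true" ]; then
      JAVA_OPTS="${JAVA_OPTS} -XX:-UseContainerSupport"
    fi
    echo "$JAVA_OPTS"
  )
}

unset JAVA_OPTS DOCKER
echo "default:  $(resolve_java_opts)"

JAVA_OPTS="-Xms1g -Xmx1g"
DOCKER=true
echo "override: $(resolve_java_opts)"
```

Note that an override drops the `-Duser.timezone` flag unless you include it yourself.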

2.2.2 application.yaml

The master-server configuration file is much like that of an ordinary Spring Boot project. In full:

spring:
  banner:
    charset: UTF-8
  application:
    name: master-server
  jackson:
    time-zone: UTC
    date-format: "yyyy-MM-dd HH:mm:ss"
  cache:
    # default enable cache, you can disable by `type: none`
    type: none
    cache-names:
      - tenant
      - user
      - processDefinition
      - processTaskRelation
      - taskDefinition
    caffeine:
      spec: maximumSize=100,expireAfterWrite=300s,recordStats
  datasource:
    driver-class-name: org.postgresql.Driver
    url: jdbc:postgresql://127.0.0.1:5432/dolphinscheduler
    username: root
    password: root
    hikari:
      connection-test-query: select 1
      minimum-idle: 5
      auto-commit: true
      validation-timeout: 3000
      pool-name: DolphinScheduler
      maximum-pool-size: 50
      connection-timeout: 30000
      idle-timeout: 600000
      leak-detection-threshold: 0
      initialization-fail-timeout: 1
  quartz:
    job-store-type: jdbc
    jdbc:
      initialize-schema: never
    properties:
      org.quartz.threadPool:threadPriority: 5
      org.quartz.jobStore.isClustered: true
      org.quartz.jobStore.class: org.quartz.impl.jdbcjobstore.JobStoreTX
      org.quartz.scheduler.instanceId: AUTO
      org.quartz.jobStore.tablePrefix: QRTZ_
      org.quartz.jobStore.acquireTriggersWithinLock: true
      org.quartz.scheduler.instanceName: DolphinScheduler
      org.quartz.threadPool.class: org.quartz.simpl.SimpleThreadPool
      org.quartz.jobStore.useProperties: false
      org.quartz.threadPool.makeThreadsDaemons: true
      org.quartz.threadPool.threadCount: 25
      org.quartz.jobStore.misfireThreshold: 60000
      org.quartz.scheduler.makeSchedulerThreadDaemon: true
      org.quartz.jobStore.driverDelegateClass: org.quartz.impl.jdbcjobstore.PostgreSQLDelegate
      org.quartz.jobStore.clusterCheckinInterval: 5000

registry:
  type: zookeeper
  zookeeper:
    namespace: dolphinscheduler
    connect-string: localhost:2181
    retry-policy:
      base-sleep-time: 60ms
      max-sleep: 300ms
      max-retries: 5
    session-timeout: 30s
    connection-timeout: 9s
    block-until-connected: 600ms
    digest: ~

master:
  listen-port: 5678
  # master fetch command num
  fetch-command-num: 10
  # master prepare execute thread number to limit handle commands in parallel
  pre-exec-threads: 10
  # master execute thread number to limit process instances in parallel
  exec-threads: 100
  # master dispatch task number per batch, if all the tasks dispatch failed in a batch, will sleep 1s.
  dispatch-task-number: 3
  # master host selector to select a suitable worker, default value: LowerWeight. Optional values include random, round_robin, lower_weight
  host-selector: lower_weight
  # master heartbeat interval
  heartbeat-interval: 10s
  # master commit task retry times
  task-commit-retry-times: 5
  # master commit task interval
  task-commit-interval: 1s
  state-wheel-interval: 5
  # master max cpuload avg, only higher than the system cpu load average, master server can schedule. default value -1: the number of cpu cores * 2
  max-cpu-load-avg: -1
  # master reserved memory, only lower than system available memory, master server can schedule. default value 0.3, the unit is G
  reserved-memory: 0.3
  # failover interval, the unit is minute
  failover-interval: 10m
  # kill yarn job when failover taskInstance, default true
  kill-yarn-job-when-task-failover: true

server:
  port: 5679

management:
  endpoints:
    web:
      exposure:
        include: '*'
  endpoint:
    health:
      enabled: true
      show-details: always
  health:
    db:
      enabled: true
    defaults:
      enabled: false
  metrics:
    tags:
      application: ${spring.application.name}

metrics:
  enabled: true

# Override by profile

---
spring:
  config:
    activate:
      on-profile: mysql
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://127.0.0.1:3306/dolphinscheduler
    username: root
    password: root
  quartz:
    properties:
      org.quartz.jobStore.driverDelegateClass: org.quartz.impl.jdbcjobstore.StdJDBCDelegate
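The second YAML document only takes effect when the `mysql` profile is active, and dolphinscheduler_env.sh sets `SPRING_PROFILES_ACTIVE` from `DATABASE`. As a sketch, switching to MySQL could therefore look like the following. The URL mirrors the mysql profile in the file, while the username and password are placeholders to replace with your own.

```shell
#!/usr/bin/env bash
# Sketch: environment for running against MySQL instead of the default
# PostgreSQL. Credentials are placeholders, not recommended values.
export DATABASE=mysql
export SPRING_PROFILES_ACTIVE=${DATABASE}
export SPRING_DATASOURCE_URL="jdbc:mysql://127.0.0.1:3306/dolphinscheduler"
export SPRING_DATASOURCE_USERNAME="root"
export SPRING_DATASOURCE_PASSWORD="root"

echo "profile: $SPRING_PROFILES_ACTIVE"
echo "url:     $SPRING_DATASOURCE_URL"
```

Spring Boot binds `SPRING_DATASOURCE_URL` to `spring.datasource.url`, so values exported this way take precedence over the ones written in application.yaml.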

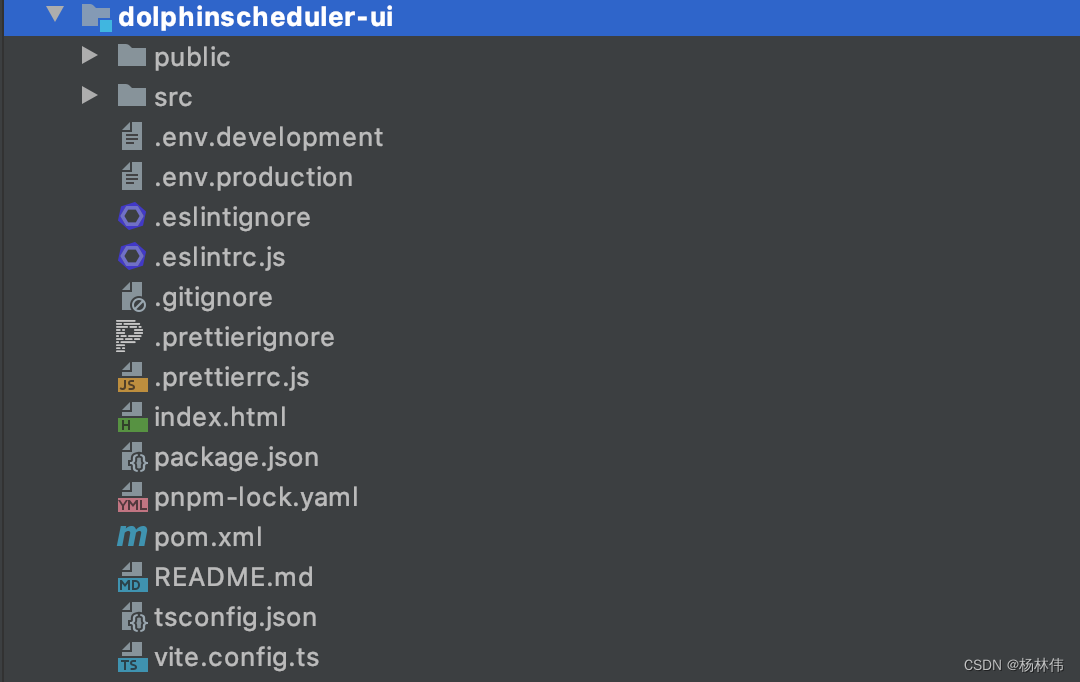

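2.3 ui configuration

The ui directory holds the front-end web resources. It is simply the front-end Vue project.

03 Closing

This post walked through the main DolphinScheduler configuration files. I hope it helps. That's all for this one!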